OpenAI’s ChatGPT represents a significant leap in AI technology. This highly sophisticated chatbot, built on OpenAI’s GPT family of language models (GPT-3.5 at its public launch), is now accessible to a global audience.

ChatGPT stands out as an intelligent conversational tool, having been trained on a comprehensive range of data. This makes it exceptionally adaptable, capable of addressing myriad challenges across a spectrum of fields.

This guide aims to instruct you on utilizing ChatGPT to construct effective Python web scrapers. Additionally, we will provide essential tips and techniques to refine and elevate the caliber of your scraper’s programming.

Let’s embark on an exploration of using ChatGPT for web scraping, uncovering its potential and practical applications.

Implementing Web Scraping via ChatGPT

This tutorial will guide you through the process of extracting a list of books from goodreads.com. We’ll present a visual representation of the website’s page layout to aid your understanding.

Next, we outline the critical steps necessary to harvest data using ChatGPT effectively.

Setting Up a ChatGPT Account

The process of setting up a ChatGPT account is straightforward. Navigate to the ChatGPT Login Page and select the sign-up option. Alternatively, for added convenience, you can opt to sign up using your Google account.

Upon completing the registration, you will gain access to the chat interface. Initiating a conversation is as simple as entering your query or message in the provided text box.

Crafting an Effective Prompt for ChatGPT

When seeking ChatGPT’s assistance in programming tasks such as web scraping, clarity and detail in your prompt are paramount. Explicitly state the programming language, along with any necessary tools or libraries. Additionally, clearly identify specific elements of the web page you intend to work with.

Equally important is to specify the desired outcome of the program and any specific coding standards or requirements that need to be adhered to.

For instance, consider this exemplary prompt requesting the development of a Python web scraper utilizing the BeautifulSoup library.

Craft a web scraper in Python using the BeautifulSoup library.

Target Website: https://www.goodreads.com/list/show/18816.Books_You_Must_Read_

Objective: Extract the names of books and their authors from the specified page.

Here are the required CSS selectors:

1. Book Name: #all_votes > table > tbody > tr:nth-child(1) > td:nth-child(3) > a > span

2. Author Name: #all_votes > table > tbody > tr:nth-child(1) > td:nth-child(3) > span:nth-child(4) > div > a > span

Desired Output: Store the collected Book Names and Author Names in a CSV file.

Additional Requirements: Ensure proper handling of character encoding and the elimination of unwanted symbols in the output CSV.

Given this prompt, ChatGPT should generate a suitable code snippet.
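If you plan to reuse this prompt structure for other pages, it may help to assemble it from named fields so that no part gets forgotten. The following is only a sketch: the `ScraperPrompt` class and its field names are hypothetical and simply mirror the sections of the example prompt above.

```python
from dataclasses import dataclass, field


@dataclass
class ScraperPrompt:
    """Hypothetical container whose fields mirror the example prompt's sections."""

    language: str
    library: str
    target_url: str
    objective: str
    selectors: dict[str, str]  # label -> CSS selector
    desired_output: str
    requirements: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Return the prompt text, one section per paragraph."""
        lines = [
            f"Craft a web scraper in {self.language} using the {self.library} library.",
            f"Target Website: {self.target_url}",
            f"Objective: {self.objective}",
            "Here are the required CSS selectors:",
        ]
        for number, (label, selector) in enumerate(self.selectors.items(), start=1):
            lines.append(f"{number}. {label}: {selector}")
        lines.append(f"Desired Output: {self.desired_output}")
        if self.requirements:
            lines.append("Additional Requirements: " + " ".join(self.requirements))
        return "\n\n".join(lines)


prompt = ScraperPrompt(
    language="Python",
    library="BeautifulSoup",
    target_url="https://www.goodreads.com/list/show/18816.Books_You_Must_Read_",
    objective="Extract the names of books and their authors from the specified page.",
    selectors={
        "Book Name": "#all_votes > table > tbody > tr:nth-child(1) > td:nth-child(3) > a > span",
        "Author Name": "#all_votes > table > tbody > tr:nth-child(1) > td:nth-child(3) > span:nth-child(4) > div > a > span",
    },
    desired_output="Store the collected Book Names and Author Names in a CSV file.",
    requirements=[
        "Ensure proper handling of character encoding and the elimination "
        "of unwanted symbols in the output CSV."
    ],
)

print(prompt.render())
```

The printed text can be pasted into the chat box as-is. Changing the URL, selectors, or output format then only requires editing one field.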

Evaluating the Generated Code

Once ChatGPT provides the code, it’s crucial to review it thoroughly. Verify that it doesn’t include superfluous libraries and confirm that all necessary packages are available for the code to function correctly.

If you encounter any issues or discrepancies with the code, ask ChatGPT to adjust it or, if necessary, rewrite it completely. Including the exact error message or the unexpected output in your follow-up usually leads to a more useful fix.
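The package check described above can also be done mechanically before you run anything. Here is a minimal sketch that uses only the standard library; the function names `imported_modules` and `missing_modules` are made up for this example, and `generated_code` stands in for whatever ChatGPT returned.

```python
import ast
import importlib.util


def imported_modules(source: str) -> set[str]:
    """Return the top-level module names imported by a piece of Python source."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 skips relative imports such as "from . import helper"
            modules.add(node.module.split(".")[0])
    return modules


def missing_modules(source: str) -> list[str]:
    """Return imported modules that cannot be found in the current environment."""
    return sorted(
        name
        for name in imported_modules(source)
        if importlib.util.find_spec(name) is None
    )


# Stand-in for the code ChatGPT generated (only parsed here, never executed).
generated_code = """
import requests
from bs4 import BeautifulSoup
import csv
"""

print("Imports:", sorted(imported_modules(generated_code)))
print("Missing:", missing_modules(generated_code))
```

Keep in mind that the import name and the package name can differ: `bs4` is installed with `pip install beautifulsoup4`, while `requests` is installed under its own name.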

Implementing Your Scraper

After reviewing, copy the provided code and conduct a trial run to ensure its proper functionality. Here’s an example of how the web scraper code might look.

import requests

from bs4 import BeautifulSoup

import csv

# Define the target URL

url = "https://www.goodreads.com/list/show/18816.Books_You_Must_Read_"

# Send an HTTP GET request to the URL

response = requests.get(url)

# Check if the request was successful

if response.status_code == 200:

soup = BeautifulSoup(response.content, 'html.parser')

book_selector = "a.bookTitle span"

auth_selector = "span[itemprop='author']"

# Find all book names and author names using CSS selectors

book_names = soup.select(book_selector)

auth_names = soup.select(auth_selector)

# Create a list to store the scraped data

book_data = []

# Loop through the book names and author names and store them in the list

for book_name, author_name in zip(book_names, auth_names):

book_name_text = book_name.get_text(strip=True)

auth_name_text = auth_name.get_text(strip=True)

book_data.append([book_name_text, auth_name_text])

# Define the CSV file name

csv_filename = "book_list.csv"

# Write the data to a CSV file

with open(csv_filename, 'w', newline='', encoding='utf-8') as csv_file:

csv_writer = csv.writer(csv_file)

# Write the header row

csv_writer.writerow(["Book Name", "Author Name"])

# Write the book data

csv_writer.writerows(book_data)

print(f"Data has been scraped and saved to {csv_filename}")

else:

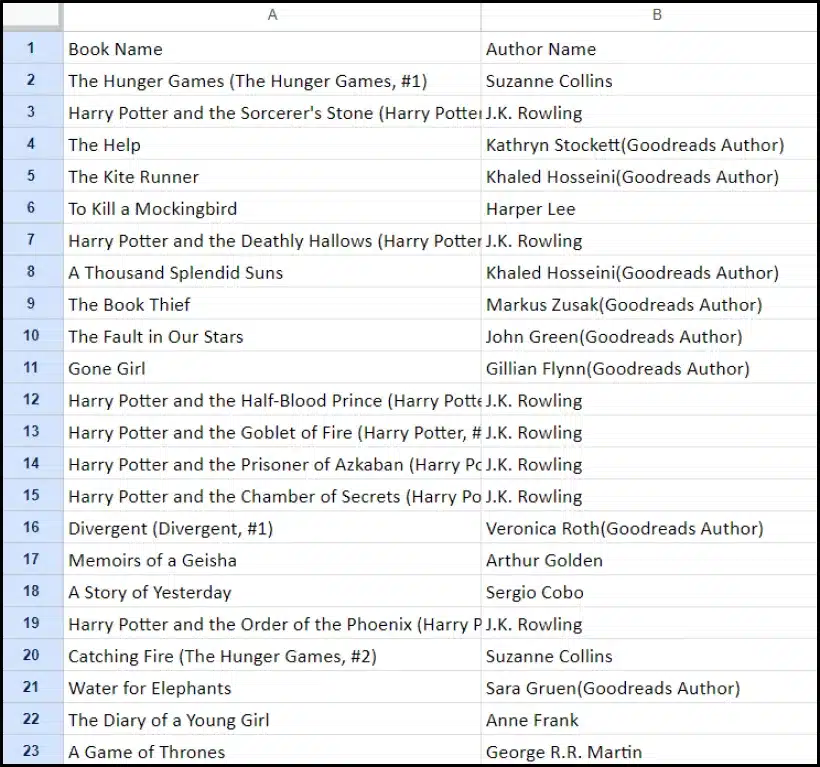

print(f"Failed to retrieve data. Status code: {response.status_code}")The sample output of the scraped data is given below.
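The prompt asked for unwanted symbols to be eliminated, but the example scraper only strips surrounding whitespace. One possible way to tidy each value before it is written to the CSV is sketched below. The helper name `clean_text` is hypothetical, and which characters count as "unwanted" is an assumption you may want to adjust for your data.

```python
import re
import unicodedata


def clean_text(value: str) -> str:
    """Normalize Unicode, drop invisible control characters, collapse whitespace."""
    # NFKC folds compatibility characters (for example non-breaking spaces)
    # into their plain equivalents.
    normalized = unicodedata.normalize("NFKC", value)
    # Drop characters in the Unicode "C" categories (control, format, and so on),
    # but keep whitespace such as tabs and newlines so it can be collapsed below.
    visible = "".join(
        ch
        for ch in normalized
        if ch.isspace() or not unicodedata.category(ch).startswith("C")
    )
    # Collapse every run of whitespace into a single space.
    return re.sub(r"\s+", " ", visible).strip()


print(clean_text("  The\u00a0Hobbit\u200b \n "))
print(clean_text("J.R.R.\tTolkien"))
```

In the scraper, this would mean appending `clean_text(book_name_text)` and `clean_text(auth_name_text)` to `book_data` instead of the raw strings.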

Enhancing Your Web Scraping Project with ChatGPT: Advanced Techniques and Considerations

You’ve made significant progress by developing a Python web scraper using BeautifulSoup, as evident in the provided code. This script is an excellent starting point for efficiently harvesting data from the specified Goodreads webpage. Now, let’s delve into some advanced aspects to further enhance your web scraping project.

Optimizing Your Code for Efficiency

Efficient code is vital for successful web scraping, particularly for large-scale tasks. To enhance your scraper’s performance, consider the following strategies:

- Leverage Frameworks and Packages: Ask ChatGPT to recommend frameworks and packages that can speed up web scraping for your particular workload.

- Utilize Caching Techniques: Implement caching to save previously fetched data, reducing redundant network calls.

- Employ Concurrency or Parallel Processing: This approach can significantly speed up data retrieval by handling multiple tasks simultaneously.

- Minimize Unnecessary Network Calls: Focus on fetching only the essential data to optimize network usage.
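Caching and concurrency from the list above can be combined in a few lines. This is a sketch under stated assumptions rather than a production design: `fetch_all` is a hypothetical helper, the cache is a plain in-memory dictionary, and the download function is passed in as `fetch` so the example runs without touching the network (a stand-in `fake_fetch` is used here).

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fetch_all(
    urls: list[str],
    fetch: Callable[[str], str],
    cache: dict[str, str],
    max_workers: int = 4,
) -> dict[str, str]:
    """Fetch each distinct URL at most once, in parallel, reusing cached results."""
    # Deduplicate while preserving order, and skip anything already cached.
    pending = [url for url in dict.fromkeys(urls) if url not in cache]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as its input.
        for url, body in zip(pending, pool.map(fetch, pending)):
            cache[url] = body
    return {url: cache[url] for url in urls}


# Stand-in for a real download function; it records every call it receives.
calls = []


def fake_fetch(url: str) -> str:
    calls.append(url)
    return f"<html>{url}</html>"


cache: dict[str, str] = {}
pages = fetch_all(
    ["https://example.com/a", "https://example.com/b", "https://example.com/a"],
    fake_fetch,
    cache,
)
fetch_all(["https://example.com/a"], fake_fetch, cache)  # served from the cache

print(f"{len(pages)} pages returned, {len(calls)} network calls made")
```

With the `requests` library, the real download function could be as small as `lambda url: requests.get(url, timeout=10).text`. Keeping `max_workers` low is a sensible default so that the extra speed does not overload the target site.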

Handling Dynamic Web Content

Many modern websites use dynamic content generation techniques, often relying on JavaScript. Here are some ways ChatGPT can assist you in navigating such complexities:

- Utilize Headless Browsers: ChatGPT can guide you in using headless browsers for scraping dynamic content.

- Automate User Interactions: Simulated user actions can be automated to interact with web pages that have complex user interfaces.
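As an illustration of the headless-browser approach, here is a short sketch using Selenium, which is one of several possible tools. It assumes Selenium 4 and a recent Chrome are installed. The helper names `chrome_arguments` and `render_page`, the window size, and the `wait_for_css` parameter are choices made for this example only.

```python
def chrome_arguments(headless: bool = True) -> list[str]:
    """Return the command-line switches passed to Chrome."""
    arguments = ["--window-size=1280,1024"]
    if headless:
        # "--headless=new" selects Chrome's newer headless mode (recent Chrome only).
        arguments.append("--headless=new")
    return arguments


def render_page(url: str, wait_for_css: str, wait_seconds: float = 10.0) -> str:
    """Load a page in Chrome and return its HTML once `wait_for_css` appears."""
    # Imported here so that the rest of the script still loads without Selenium.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    for argument in chrome_arguments():
        options.add_argument(argument)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Block until JavaScript has added the element we care about.
        WebDriverWait(driver, wait_seconds).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, wait_for_css))
        )
        return driver.page_source
    finally:
        # Always close the browser, even if the wait times out.
        driver.quit()
```

The returned HTML can be handed to BeautifulSoup exactly like `response.content` in the earlier scraper, for example `BeautifulSoup(render_page(url, "a.bookTitle"), "html.parser")`.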

Code Linting and Editing

Maintaining clean, readable code is crucial. ChatGPT can assist in several ways:

- Suggest Best Practices: ChatGPT can recommend coding standards and practices to enhance readability and efficiency.

- Lint Your Code: Ask ChatGPT to ‘lint the code’ for suggestions on tidying up and optimizing your script.
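ChatGPT’s lint suggestions are easier to trust when you can cross-check them locally. The sketch below shows one such check, flagging imports that are never used. It relies only on the standard library `ast` module, the function name `unused_imports` is hypothetical, and it is far rougher than a dedicated linter such as flake8 or pylint.

```python
import ast


def unused_imports(source: str) -> list[str]:
    """Return imported names that are never referenced in the source."""
    imported = set()
    used = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                # "import a.b" binds the name "a"; "import a.b as c" binds "c".
                imported.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            for alias in node.names:
                if alias.name != "*":  # star imports cannot be checked this way
                    imported.add(alias.asname or alias.name)
        elif isinstance(node, ast.Name):
            used.add(node.id)
    return sorted(imported - used)


# Sample script to inspect (only parsed here, never executed).
sample = """
import csv
import json
from bs4 import BeautifulSoup

soup = BeautifulSoup("<p>hi</p>", "html.parser")
writer = csv.writer(open("out.csv", "w"))
"""

print(unused_imports(sample))
```

This check ignores names that are only referenced inside strings or re-exported through `__all__`, so treat its output as a hint rather than a verdict.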

Overcoming Limitations with Proxy Services

While ChatGPT is a powerful tool, it’s essential to acknowledge limitations when scraping web data from sites with stringent security measures. To address challenges like CAPTCHAs and rate-limiting, consider using proxy services such as OneProxy. They offer:

- High-Quality Proxy Pool: Access to a premium pool of proxies with excellent reputation and performance.

- Reliable Data Retrieval: Spreading requests across many IP addresses makes it less likely that they will be rate-limited, which helps maintain consistent access to the required data.

Application of OneProxy in Web Scraping

Utilizing OneProxy can significantly enhance your web scraping capabilities. By routing your requests through various proxies, you can:

- Bypass Rate Limiting and CAPTCHAs: OneProxy can help in circumventing common anti-scraping measures.

- Access Accurate and Unlimited Web Data: With a robust proxy network, OneProxy ensures reliable and uninterrupted data access.
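Routing requests through a pool of proxies can be sketched with the `proxies` parameter of the `requests` library. The proxy addresses below are placeholders on `example.com`, not real OneProxy endpoints. Substitute the host, port, and credentials your provider gives you. The helper names `proxy_rotation` and `fetch_via_proxy` are likewise made up for this example.

```python
from itertools import cycle

# Placeholder endpoints: replace with the details supplied by your proxy provider.
PROXY_URLS = [
    "http://user:password@proxy1.example.com:8080",
    "http://user:password@proxy2.example.com:8080",
]


def proxy_rotation(proxy_urls):
    """Yield requests-style proxy mappings, cycling through the pool forever."""
    for proxy_url in cycle(proxy_urls):
        yield {"http": proxy_url, "https": proxy_url}


def fetch_via_proxy(url: str, proxies: dict, timeout: float = 10.0) -> str:
    """Download a page through the given proxy mapping."""
    import requests  # imported here so the rotation helper works without it

    response = requests.get(url, proxies=proxies, timeout=timeout)
    response.raise_for_status()
    return response.text


rotation = proxy_rotation(PROXY_URLS)
first, second, third = next(rotation), next(rotation), next(rotation)
print(first["http"])
print(second["http"])
print(third == first)
```

Each call to `next(rotation)` supplies the mapping for one request, so consecutive calls to `fetch_via_proxy(url, next(rotation))` leave through different proxies.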

By combining the power of ChatGPT with the strategic use of tools like OneProxy and adhering to best practices in coding and web scraping, you can efficiently and effectively gather the data you need from a wide range of web sources.

Conclusion: Unleashing the Power of ChatGPT in Web Scraping

In summary, ChatGPT emerges as a pivotal tool in the realm of web scraping, bringing a multitude of opportunities to the forefront. Its capabilities in generating, refining, and enhancing code are indispensable for both novice and seasoned web scrapers.

ChatGPT’s role in web scraping is not just confined to code generation; it extends to providing insightful tips, handling complex web pages, and even advising on best practices for efficient scraping. As technology evolves, ChatGPT’s contribution to simplifying and advancing web scraping tasks is becoming increasingly vital.

This marks a new era where web scraping, powered by advanced AI tools like ChatGPT, becomes more accessible, efficient, and effective for a wide range of users, from individual hobbyists to large-scale data analysts.

Here’s to successful and innovative scraping endeavors in the future – Happy Scraping!