A cache server is a critical component of modern web infrastructure designed to enhance the performance and efficiency of web services. It stores frequently accessed data temporarily, reducing the need to fetch the same information repeatedly from the original source. By doing so, cache servers significantly speed up data retrieval and improve the overall user experience.

History of the cache server and its first mention

The concept of caching dates back to the early days of computing, when memory and storage were limited. Cache memory emerged in the 1960s: Maurice Wilkes described a small, fast "slave memory" in 1965, and the IBM System/360 Model 85, announced in 1968, was among the first commercial computers to include a cache. Such memory held frequently accessed data, reducing the time taken to fetch it from slower main memory.

Over the years, as the internet and web services grew, the need for caching became more apparent. In the 1990s, with the rise of the World Wide Web, web browsers began to implement caching to store web page elements, allowing faster page loads during subsequent visits.

Detailed information about cache servers

A cache server is specialized hardware or software that stores copies of frequently requested data from the original source so that future requests can be served more efficiently. When a user accesses a website or requests a particular resource, such as an image, video, or file, the cache server intercepts the request.

If the requested resource is present in the cache, the cache server delivers it directly to the user without fetching it from the original server. This reduces latency and bandwidth consumption, because the response typically comes from a server closer to the user and the original server does no work for that request.

Cache servers employ various caching techniques, such as:

- Web Caching: Caching web pages and their associated elements (HTML, CSS, JavaScript) to speed up website loading for users.

- Content Delivery Network (CDN): A CDN is a distributed network of cache servers that stores and delivers content from multiple locations worldwide. CDNs help minimize latency and ensure faster content delivery, especially for geographically dispersed users.

- Database Caching: Caching frequently accessed database queries and results to accelerate data retrieval for applications.

- API Caching: Caching responses from APIs to reduce the overhead on backend servers and improve API response times.
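As a minimal illustration of database or API caching, the sketch below memoizes a slow lookup with Python's standard `functools.lru_cache`. The `fetch_user` function and the `CALLS` counter are hypothetical stand-ins for a real query or API call, and the sketch leaves out the expiry and invalidation a real cache server would need.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the simulated backend is actually hit


@lru_cache(maxsize=128)
def fetch_user(user_id: int) -> dict:
    """Hypothetical stand-in for a slow database query or API call."""
    CALLS["count"] += 1
    return {"id": user_id, "name": f"user-{user_id}"}


first = fetch_user(1)   # miss: the function body runs
second = fetch_user(1)  # hit: the stored result is returned
info = fetch_user.cache_info()  # hit and miss counters kept by lru_cache
```

Because `lru_cache` hands back the same object on every hit, callers should treat cached results as read-only.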

The internal structure of the cache server and how it works

The internal structure of a cache server typically involves the following components:

- Cache Store: This is where the cached data is stored. It can be implemented using various storage media such as RAM, SSDs, or a combination of both, depending on the access speed requirements.

- Cache Manager: The cache manager handles the insertion, eviction, and retrieval of data from the cache store. It uses caching algorithms to determine which items to keep and which to replace when the cache reaches its capacity limit.

- Cache Update Mechanism: The cache server needs to be synchronized with the original server to ensure that it holds the latest version of the data. This is usually done using cache invalidation or cache expiration techniques.

- Cache Control Interface: A cache server often provides an interface or API to manage and control caching behavior, such as configuring cache rules, clearing cache, or purging specific cached items.
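The components above can be sketched in a few lines. The class below is an illustrative toy, not the design of any particular product: an `OrderedDict` plays the cache store, the `get` and `put` methods play the cache manager with a least-recently-used (LRU) eviction policy, and `purge` stands in for a control interface. All names are assumptions made for this example.

```python
from collections import OrderedDict
from typing import Any, List, Optional


class LRUCache:
    """Toy cache store plus manager: bounded size, least-recently-used eviction."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._store: "OrderedDict[str, Any]" = OrderedDict()  # the cache store

    def get(self, key: str) -> Optional[Any]:
        if key not in self._store:
            return None  # cache miss
        self._store.move_to_end(key)  # mark as most recently used
        return self._store[key]

    def put(self, key: str, value: Any) -> None:
        self._store[key] = value
        self._store.move_to_end(key)
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry

    def purge(self, key: str) -> bool:
        """Control-interface style operation: drop one entry if it exists."""
        if key in self._store:
            del self._store[key]
            return True
        return False

    def keys(self) -> List[str]:
        return list(self._store)  # ordered from least to most recently used


cache = LRUCache(capacity=2)
cache.put("/a.css", "A")
cache.put("/b.js", "B")
cache.get("/a.css")        # touching /a.css makes /b.js the eviction candidate
cache.put("/c.png", "C")   # over capacity, so /b.js is evicted
remaining = cache.keys()
```

LRU is only one possible policy; the same structure could evict by frequency of use or by entry age instead.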

The typical workflow of a cache server involves:

- A user requests a resource from a website or application.

- The cache server intercepts the request and checks if the resource is available in its cache store.

- If the resource is found in the cache, the cache server delivers it directly to the user.

- If the resource is not in the cache or has expired, the cache server fetches it from the original server, stores a copy in the cache store, and then delivers it to the user.

- The cache server keeps its store current by expiring entries after a set lifetime, revalidating them with the original server, or removing them when they are invalidated.
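The workflow above can be sketched as a read-through cache with a fixed time-to-live (TTL). This is a simplified model under stated assumptions: `ReadThroughCache`, `fake_origin`, and the injectable `clock` are hypothetical names, and a real server would usually derive lifetimes from response headers rather than a single constant.

```python
import time
from typing import Callable, Dict, List, Tuple


class ReadThroughCache:
    """Serve from the cache when fresh, otherwise fetch from the origin and store."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, bytes]] = {}  # url -> (expires_at, body)

    def get(self, url: str) -> Tuple[bytes, str]:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and entry[0] > now:
            return entry[1], "HIT"  # found and still fresh
        body = self._fetch(url)  # missing or expired: go to the origin
        self._entries[url] = (now + self._ttl, body)
        return body, "MISS"


origin_calls: List[str] = []


def fake_origin(url: str) -> bytes:
    """Hypothetical origin server that records each request it receives."""
    origin_calls.append(url)
    return f"body of {url}".encode()


now = {"t": 0.0}  # fake clock, in seconds
cache = ReadThroughCache(fake_origin, ttl_seconds=60, clock=lambda: now["t"])
r1 = cache.get("/index.html")  # first request: fetched from the origin
r2 = cache.get("/index.html")  # repeat request: served from the cache
now["t"] = 61.0                # advance past the TTL
r3 = cache.get("/index.html")  # entry expired: fetched again
```

Three client requests result in only two origin requests here; with a longer TTL or more repeat traffic, the share served from the cache grows.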

Analysis of the key features of cache servers

Cache servers offer several key features that benefit web services and users alike:

- Improved Performance: By reducing data retrieval time, cache servers lead to faster response times, shorter page load times, and overall better user experience.

- Bandwidth Savings: Cached data is served from the cache, so the same content does not have to be transferred from the original server again for each request. This reduces bandwidth consumption and costs.

- Lower Server Load: With cache servers handling a significant portion of the requests, the load on the original server decreases, enabling it to focus on other critical tasks.

- Fault Tolerance: Cache servers can act as a buffer during temporary server outages. If the original server goes down, the cache server can continue to serve cached content until the original server is back online.

- Geographic Distribution: CDNs, a type of cache server network, can replicate content across multiple locations globally, ensuring fast and reliable content delivery to users around the world.
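The fault-tolerance behavior described above can be sketched as a fallback to the last cached copy when the origin is unreachable, similar in spirit to the `stale-if-error` Cache-Control extension. The function, the exception class, and the simulated origin are all hypothetical, and for brevity the sketch always tries the origin first and ignores freshness.

```python
from typing import Callable, Dict, Tuple


class OriginUnavailable(Exception):
    """Raised by the hypothetical origin fetcher when the origin cannot be reached."""


def fetch_with_stale_fallback(
    url: str,
    cache: Dict[str, bytes],
    fetch_from_origin: Callable[[str], bytes],
) -> Tuple[bytes, str]:
    """Try the origin first; if it is down, fall back to the last cached copy."""
    try:
        body = fetch_from_origin(url)
    except OriginUnavailable:
        if url in cache:
            return cache[url], "STALE"  # origin is down, serve what we have
        raise  # nothing cached, so the failure reaches the client
    cache[url] = body  # remember the latest good response
    return body, "FRESH"


origin = {"up": True}  # switch used to simulate an outage


def simulated_origin(url: str) -> bytes:
    if not origin["up"]:
        raise OriginUnavailable(url)
    return b"<h1>home</h1>"


cache: Dict[str, bytes] = {}
before = fetch_with_stale_fallback("/home", cache, simulated_origin)
origin["up"] = False  # the origin goes offline
during = fetch_with_stale_fallback("/home", cache, simulated_origin)
```

Only resources that were cached before the outage can be served this way; requests for anything else still fail.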

Types of cache servers

Cache servers can be categorized based on their purpose and the type of data they cache. Here are some common types:

| Type | Description |
|---|---|
| Web Cache | Stores web page elements (HTML, CSS, JavaScript) to speed up website loading. |
| CDN | Distributed cache servers that deliver content from multiple locations worldwide. |
| Database Cache | Caches frequently accessed database queries and results for faster data retrieval. |
| API Cache | Caches responses from APIs to improve API response times and reduce backend load. |
| Content Cache | Caches multimedia content (images, videos) to reduce load times and bandwidth usage. |

Ways to Use Cache Server:

- Web Acceleration: Cache servers speed up website loading for users, which can lower bounce rates and, since page speed is one of many ranking signals, may help search rankings.

- Content Distribution: CDNs cache and distribute content to multiple edge locations, ensuring faster and more reliable content delivery.

- Database Performance: Caching frequently accessed database queries can significantly enhance application performance and reduce database load.

Problems and Solutions:

- Stale Cache: Cached data might become outdated or stale. Cache servers employ cache expiration or invalidation techniques to ensure that outdated content is not served to users.

- Cache Invalidation Challenges: When the original data is updated, cache invalidation can be complex, requiring careful management to avoid serving outdated information.

- Cache Size and Eviction Policies: Cache servers have limited storage capacity, and selecting efficient eviction policies is essential to maintain the most relevant data in the cache.
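One common way to limit stale data is to invalidate a cached entry whenever the underlying value is written. The sketch below shows that idea with a plain dictionary standing in for the original data source; `CachedStore` and its counters are hypothetical, and the sketch ignores the harder cases (several cache servers, concurrent writers) that make invalidation difficult in practice.

```python
from typing import Any, Dict


class CachedStore:
    """Cache in front of a backing store, invalidated on every write."""

    def __init__(self, backing: Dict[str, Any]) -> None:
        self._backing = backing  # stands in for the original data source
        self._cache: Dict[str, Any] = {}
        self.backing_reads = 0  # how many reads reached the backing store

    def read(self, key: str) -> Any:
        if key in self._cache:
            return self._cache[key]  # served from the cache
        self.backing_reads += 1
        value = self._backing[key]
        self._cache[key] = value
        return value

    def write(self, key: str, value: Any) -> None:
        self._backing[key] = value
        self._cache.pop(key, None)  # invalidate so the next read sees the new value


store = CachedStore({"price": 10})
old = store.read("price")        # miss: loaded from the backing store
cached = store.read("price")     # hit
store.write("price", 12)         # update and invalidate
new = store.read("price")        # miss again: the fresh value is loaded
```

Without the `pop` call in `write`, the last read would still return the old price, which is the stale-cache problem described above.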

Main characteristics and comparison with similar terms

| Characteristic | Cache Server | Load Balancer | Proxy Server |
|---|---|---|---|
| Function | Caching frequently accessed data to speed up retrieval. | Distributing traffic across multiple servers to balance the load. | Acting as an intermediary between clients and servers, forwarding requests. |
| Purpose | Optimize data access times and reduce server load. | Ensure even distribution of traffic, preventing server overload. | Enhance security, privacy, and performance for clients and servers. |
| Type | Software or Hardware. | Software or Hardware. | Software or Hardware. |
| Examples | Varnish, Squid. | HAProxy, NGINX. | Apache HTTP Server (mod_proxy), NGINX. |

The future of cache servers is promising, driven by advancements in hardware and software technologies. Some key trends and technologies include:

- Edge Computing: The rise of edge computing will lead to cache servers being deployed closer to end-users, reducing latency and further improving performance.

- Machine Learning: Cache servers can leverage machine learning algorithms to predict user behavior and proactively cache data, enhancing cache hit rates.

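- Immutable Caching: Content that never changes at a given URL, such as a versioned or content-hashed asset, can be marked as immutable (for example with the Cache-Control immutable directive) so that caches reuse it without revalidation, which sidesteps many cache consistency challenges.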

- Real-Time Data Caching: Caching real-time data streams will become crucial for applications like IoT, where low latency is essential.
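Immutable caching is often paired with content-hashed file names, so that any change to a file produces a new URL and old cached copies never need updating. The helper below is a hypothetical sketch of that naming scheme; the eight-character digest length and the one-year `max-age` are illustrative choices, not requirements.

```python
import hashlib


def fingerprinted_name(filename: str, content: bytes, digest_chars: int = 8) -> str:
    """Embed a short content hash in the file name, e.g. app.<hash>.js."""
    digest = hashlib.sha256(content).hexdigest()[:digest_chars]
    stem, dot, ext = filename.rpartition(".")
    if not dot:
        return f"{filename}.{digest}"  # no extension: append the hash
    return f"{stem}.{digest}.{ext}"


# Response header a server might send for such files (illustrative values).
IMMUTABLE_HEADERS = {"Cache-Control": "public, max-age=31536000, immutable"}

v1 = fingerprinted_name("app.js", b"console.log('v1');")
v2 = fingerprinted_name("app.js", b"console.log('v2');")
same = fingerprinted_name("app.js", b"console.log('v1');")
```

Identical content always maps to the same name, while changed content gets a different one, so a cache can keep each version for as long as it likes.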

How proxy servers can be used or associated with cache servers

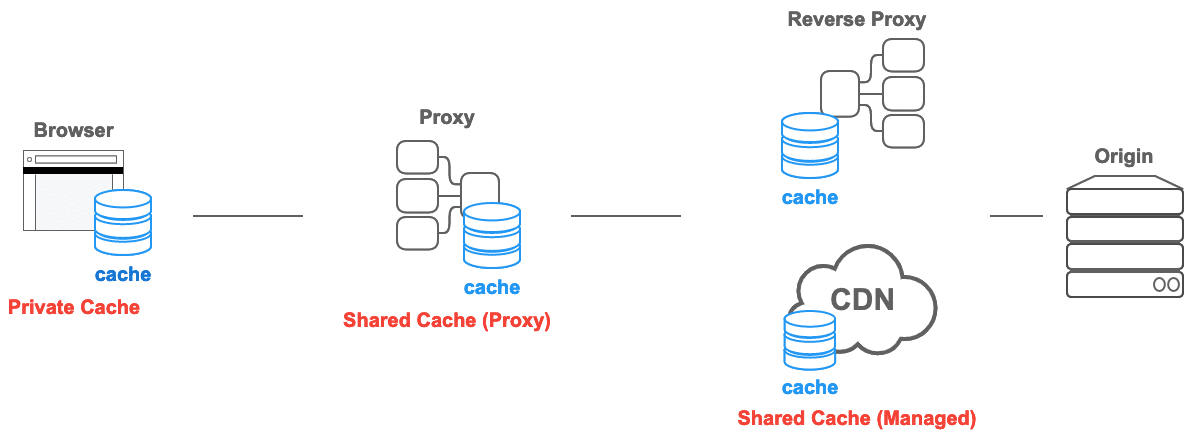

Proxy servers and cache servers are often used in conjunction to enhance web performance, security, and privacy. Proxy servers act as intermediaries between clients and servers, while cache servers store frequently accessed data to speed up retrieval. Combining the two technologies provides several benefits:

- Caching Proxies: Proxy servers can be configured as caching proxies, allowing them to cache content and serve it to clients without contacting the original server repeatedly.

- Load Balancing and Caching: Load balancers distribute client requests across multiple servers, while caching proxies reduce server load by serving cached content.

- Security and Anonymity: Proxy servers can anonymize client requests, and cache servers can store frequently requested resources securely.
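A caching proxy has to decide, for each response, whether it may store it and for how long. The sketch below reads that decision from a `Cache-Control` header value. It is deliberately conservative and incomplete: `shared_cache_ttl` is a hypothetical helper, and it ignores `no-cache`, `Expires`, `Vary`, and heuristic freshness, all of which a real proxy would need to handle.

```python
from typing import Dict, Optional


def shared_cache_ttl(cache_control: str) -> Optional[int]:
    """Return how many seconds a shared cache may reuse a response, or None."""
    directives: Dict[str, str] = {}
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        name = name.strip().lower()
        if name:
            directives[name] = value.strip().strip('"')

    # A shared cache such as a proxy must not store these responses.
    if "no-store" in directives or "private" in directives:
        return None

    # s-maxage applies to shared caches and takes precedence over max-age.
    for name in ("s-maxage", "max-age"):
        if name in directives:
            try:
                return max(0, int(directives[name]))
            except ValueError:
                return None  # malformed value: do not cache
    return None  # no explicit lifetime: this sketch chooses not to cache


public_ttl = shared_cache_ttl("public, max-age=300")
shared_ttl = shared_cache_ttl("max-age=300, s-maxage=60")
private_ttl = shared_cache_ttl("private, max-age=300")
no_store_ttl = shared_cache_ttl("no-store")
```

Returning `None` whenever the header is missing or unclear errs on the side of forwarding the request to the original server.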


In summary, cache servers are a fundamental component of modern web architecture: they optimize data retrieval and improve the overall user experience. By implementing cache servers strategically, websites and applications can achieve faster load times, lower bandwidth usage, and reduced load on origin servers, ultimately leading to higher user satisfaction and greater efficiency for web service providers.