Auto-regressive models are a class of statistical models widely used in various fields, including natural language processing, time-series analysis, and image generation. These models predict a sequence of values based on previously observed values, making them well-suited for tasks that involve sequential data. Auto-regressive models have proven to be highly effective in generating realistic data and predicting future outcomes.

History and first mentions of auto-regressive models

The concept of auto-regression dates back to the early 20th century, with pioneering work by the British statistician George Udny Yule in 1927. In the 1940s, the mathematician Norbert Wiener's research on stochastic processes and prediction laid the groundwork for auto-regressive models as we know them today.

The term “auto-regressive” is generally credited to the Swedish statistician Herman Wold, who used it in his 1938 study of stationary time series. It describes a model that regresses a variable against its own lagged values, thereby capturing the dependence of a variable on its own past.

Auto-Regressive Models: Detailed Information

Auto-regressive (AR) models are essential tools in time-series analysis, utilized to forecast future values based on historical data. These models assume that past values influence current and future values in a linear manner. They are widely used in economics, finance, weather forecasting, and various other fields where time-series data is prevalent.

Mathematical Representation

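An auto-regressive model of order $p$, written AR($p$), is mathematically expressed as:

$$X_t = \phi_1 X_{t-1} + \phi_2 X_{t-2} + \dots + \phi_p X_{t-p} + \varepsilon_t$$

Where:

- $X_t$ is the value of the series at time $t$.

- $\phi_1, \dots, \phi_p$ are the coefficients of the model.

- $X_{t-1}, \dots, X_{t-p}$ are the past values of the series.

- $\varepsilon_t$ is the error term at time $t$, typically assumed to be white noise with a mean of zero and constant variance.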
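
To make the definition concrete, the following is a minimal sketch of how such a series could be simulated with Python's standard library. The function name `simulate_ar`, the Gaussian noise, the zero starting values, and the burn-in length are assumptions made for illustration, not part of the definition.

```python
import random

def simulate_ar(coeffs, n, sigma=1.0, burn_in=200, seed=0):
    """Simulate n values of X_t = sum_i coeffs[i-1] * X_{t-i} + eps_t."""
    rng = random.Random(seed)
    p = len(coeffs)
    history = [0.0] * p  # assumed start at zero; the burn-in washes this out
    out = []
    for t in range(n + burn_in):
        # history[-1] is X_{t-1}, history[-2] is X_{t-2}, and so on
        value = sum(phi * history[-(i + 1)] for i, phi in enumerate(coeffs))
        value += rng.gauss(0.0, sigma)  # white-noise error term
        history.append(value)
        if t >= burn_in:
            out.append(value)
    return out

# Illustrative AR(2) series with coefficients 0.5 and 0.2
series = simulate_ar([0.5, 0.2], n=500)
print(len(series), series[:3])
```

Discarding the first `burn_in` values is a common way to reduce the influence of the arbitrary starting values on the simulated series.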

Determining the Order (p)

The order of an AR model is crucial as it determines the number of past observations to include in the model. The choice of $p$ involves a trade-off:

- Lower order models (small $p$) may fail to capture all relevant patterns in the data, leading to underfitting.

- Higher order models (large $p$) can capture more complex patterns but risk overfitting, where the model describes random noise instead of the underlying process.

Common methods to determine the optimal order include:

- Partial Autocorrelation Function (PACF): Identifies the significant lags that should be included.

- Information Criteria: Criteria like the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC) balance model fit and complexity to choose an appropriate $p$.
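
Both approaches can be sketched with the Levinson-Durbin recursion, which yields the partial autocorrelations and the error variance of each candidate order from the sample autocovariances. The code below is an illustrative sketch under assumptions: the helper names, the simulated AR(2) data, and the simplified criteria $n \ln \hat\sigma_k^2 + 2k$ (AIC) and $n \ln \hat\sigma_k^2 + k \ln n$ (BIC), which drop constant terms, are not taken from any particular library.

```python
import math
import random

def autocovariances(x, max_lag):
    """Biased sample autocovariances gamma(0), ..., gamma(max_lag)."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    return [sum(d[t] * d[t - k] for t in range(k, n)) / n
            for k in range(max_lag + 1)]

def levinson_durbin(gamma):
    """Return (pacf, sigma2) for orders 0..len(gamma)-1."""
    phi = []             # AR coefficients of the current order
    pacf = [1.0]
    sigma2 = [gamma[0]]  # error variance of the order-0 model
    for k in range(1, len(gamma)):
        acc = gamma[k] - sum(phi[j] * gamma[k - 1 - j] for j in range(k - 1))
        reflection = acc / sigma2[-1]  # partial autocorrelation at lag k
        phi = [phi[j] - reflection * phi[k - 2 - j] for j in range(k - 1)]
        phi.append(reflection)
        pacf.append(reflection)
        sigma2.append(sigma2[-1] * (1.0 - reflection ** 2))
    return pacf, sigma2

def select_order(x, max_lag):
    """Return the PACF and the orders minimising the simplified AIC and BIC."""
    n = len(x)
    pacf, sigma2 = levinson_durbin(autocovariances(x, max_lag))
    aic = [n * math.log(sigma2[k]) + 2 * k for k in range(max_lag + 1)]
    bic = [n * math.log(sigma2[k]) + k * math.log(n) for k in range(max_lag + 1)]
    return pacf, aic.index(min(aic)), bic.index(min(bic))

# Hypothetical data: an AR(2) series with coefficients 0.5 and 0.2
rng = random.Random(1)
x = [0.0, 0.0]
for _ in range(1000):
    x.append(0.5 * x[-1] + 0.2 * x[-2] + rng.gauss(0.0, 1.0))
x = x[200:]  # drop burn-in values

pacf, p_aic, p_bic = select_order(x, max_lag=8)
band = 1.96 / math.sqrt(len(x))  # approximate 95% band for a zero PACF
significant = [k for k in range(1, 9) if abs(pacf[k]) > band]
print("significant PACF lags:", significant)
print("order by AIC:", p_aic, "order by BIC:", p_bic)
```

Because BIC penalises each extra coefficient more heavily than AIC once the sample has more than a handful of points, it never selects a larger order than AIC in this setup.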

Model Estimation

Estimating the parameters involves fitting the model to historical data. This can be done using techniques such as:

- Least Squares Estimation: Minimizes the sum of squared errors between the observed and predicted values.

- Maximum Likelihood Estimation: Finds the parameters that maximize the likelihood of observing the given data.
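
As an illustration of the least squares approach, the sketch below regresses each value on its $p$ predecessors by solving the normal equations in plain Python. The helper names and the simulated data are assumptions for the example; in practice a numerical library would usually handle the linear algebra.

```python
import random

def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def fit_ar_least_squares(series, p):
    """Estimate AR(p) coefficients (no intercept) by least squares."""
    # Each row holds (X_{t-1}, ..., X_{t-p}); the target is X_t.
    rows = [[series[t - i] for i in range(1, p + 1)] for t in range(p, len(series))]
    targets = series[p:]
    gram = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    rhs = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(p)]
    return solve_linear(gram, rhs)

# Hypothetical data generated with coefficients 0.5 and 0.2
rng = random.Random(0)
x = [0.0, 0.0]
for _ in range(2000):
    x.append(0.5 * x[-1] + 0.2 * x[-2] + rng.gauss(0.0, 1.0))
print(fit_ar_least_squares(x, p=2))  # estimates should land near 0.5 and 0.2
```

When the errors are assumed Gaussian, this conditional least squares estimate coincides with the maximum likelihood estimate conditioned on the first $p$ observations, which is why the two techniques often give very similar results.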

Model Diagnostics

After fitting an AR model, it is essential to evaluate its adequacy. Key diagnostic checks include:

- Residual Analysis: Ensures that residuals (errors) resemble white noise, indicating no patterns left unexplained by the model.

- Ljung-Box Test: Assesses whether any of the autocorrelations of the residuals are significantly different from zero.
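
The Ljung-Box statistic is $Q = n(n+2)\sum_{k=1}^{h} \hat\rho_k^2 / (n-k)$, where $\hat\rho_k$ is the lag-$k$ sample autocorrelation of the residuals and $h$ is the number of lags tested. The sketch below computes $Q$ with the standard library and compares it with the tabulated 5% critical value of a chi-square distribution with 10 degrees of freedom. The helper names and the simulated inputs are assumptions for illustration; a p-value would require a chi-square distribution function from a statistics library.

```python
import random

def autocorrelations(x, max_lag):
    """Sample autocorrelations at lags 1..max_lag."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    c0 = sum(v * v for v in d)
    return [sum(d[t] * d[t - k] for t in range(k, n)) / c0
            for k in range(1, max_lag + 1)]

def ljung_box_q(residuals, max_lag):
    """Ljung-Box Q statistic over lags 1..max_lag."""
    n = len(residuals)
    r = autocorrelations(residuals, max_lag)
    return n * (n + 2) * sum(rk ** 2 / (n - k) for k, rk in enumerate(r, start=1))

CHI2_95_DF10 = 18.307  # tabulated 95% quantile, chi-square with 10 df

rng = random.Random(0)
white = [rng.gauss(0.0, 1.0) for _ in range(500)]  # stands in for good residuals
ar1 = [0.0]                                        # stands in for poor residuals
for _ in range(499):
    ar1.append(0.8 * ar1[-1] + rng.gauss(0.0, 1.0))

q_white = ljung_box_q(white, 10)
q_ar1 = ljung_box_q(ar1, 10)
print("white noise Q:", round(q_white, 2))
print("autocorrelated Q:", round(q_ar1, 2), "exceeds critical value:", q_ar1 > CHI2_95_DF10)
```

When the test is applied to the residuals of a fitted AR($p$) model, the degrees of freedom are usually reduced to $h - p$ to account for the estimated coefficients.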

Applications

AR models are versatile and find applications in various domains:

- Economics and Finance: Forecasting stock prices, interest rates, and economic indicators.

- Weather Forecasting: Predicting temperature and precipitation patterns.

- Engineering: Signal processing and control systems.

- Biostatistics: Modeling biological time-series data.

Advantages and Limitations

Advantages:

- Simplicity and ease of implementation.

- Clear interpretation of parameters.

- Effective for short-term forecasting.

Limitations:

- Assumes linear relationships.

- Can be inadequate for data with strong seasonality or non-linear patterns.

- Sensitive to the choice of order $p$.

Example

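Consider an AR(2) model (order 2) for time series data:

$$X_t = 0.5 X_{t-1} + 0.2 X_{t-2} + \varepsilon_t$$

Here, the value at time $t$, $X_t$, depends on the values at the previous two time points, $X_{t-1}$ and $X_{t-2}$, with coefficients 0.5 and 0.2 respectively, plus the error term $\varepsilon_t$.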
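
A forecast from this AR(2) example can be sketched as follows. Future error terms are replaced by their mean of zero, and each forecast is fed back in as an input for the next step. The function name `forecast_ar` and the two starting values are hypothetical.

```python
def forecast_ar(coeffs, history, steps):
    """Iterated forecasts; history is ordered oldest to newest."""
    values = list(history)
    forecasts = []
    for _ in range(steps):
        # values[-1] is the most recent value, values[-2] the one before it
        nxt = sum(phi * values[-(i + 1)] for i, phi in enumerate(coeffs))
        forecasts.append(nxt)
        values.append(nxt)  # feed the forecast back in for the next step
    return forecasts

# With the last two observations 1.0 and then 2.0, the forecasts are
# approximately 1.2, 1.0, and 0.74.
print(forecast_ar([0.5, 0.2], history=[1.0, 2.0], steps=3))
```

The first forecast is $0.5 \times 2.0 + 0.2 \times 1.0 = 1.2$. Because the coefficients describe a stationary process, forecasts further ahead shrink toward the series mean of zero.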

Analysis of the key features of Auto-regressive models

Auto-regressive models offer several key features that make them valuable for various applications:

- Sequence Prediction: Auto-regressive models excel at predicting future values in a time-ordered sequence, making them ideal for time-series forecasting.

- Generative Capabilities: These models can generate new data samples that resemble the training data, making them useful for data augmentation and creative tasks like text and image generation.

- Flexibility: Auto-regressive models can accommodate different data types and are not limited to a specific domain, allowing their application in various fields.

- Interpretability: The simplicity of the model’s structure allows for easy interpretation of its parameters and predictions.

- Adaptability: Auto-regressive models can adapt to changing data patterns and incorporate new information over time.
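
The generative use mentioned above follows the same recipe as forecasting: predict a distribution over the next element from what came before, sample from it, and repeat. The toy sketch below does this for text with a character-level model of order 1, which conditions only on the previous character. The corpus and function names are invented for illustration, and real text generators condition on far longer contexts.

```python
import random
from collections import Counter, defaultdict

def fit_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, seed=0):
    """Sample characters one at a time, each conditioned on the previous one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = counts.get(out[-1])
        if not options:  # no observed continuation for this character
            break
        chars = list(options)
        weights = [options[c] for c in chars]
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)

corpus = "the cat sat on the mat and the rat ate the oat "
model = fit_bigram(corpus)
sample = generate(model, start="t", length=40)
print(sample)
```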

Types of Auto-regressive models

Auto-regressive models come in various forms, each with its own specific characteristics. The main types of auto-regressive models include:

- Auto-regressive Moving Average models (ARMA): Combine auto-regressive and moving average components, so the current value depends on both past values and past error terms.

- Auto-regressive Integrated Moving Average models (ARIMA): Extends ARMA by incorporating differencing to achieve stationarity in non-stationary time-series data.

- Seasonal Auto-regressive Integrated Moving Average models (SARIMA): A seasonal version of ARIMA, suitable for time-series data with seasonal patterns.

- Vector Auto-regressive models (VAR): A multivariate extension of auto-regressive models, used when multiple variables influence each other.

- Long Short-Term Memory (LSTM) networks: A type of recurrent neural network that can capture long-range dependencies in sequential data, often used in natural language processing and speech recognition tasks.

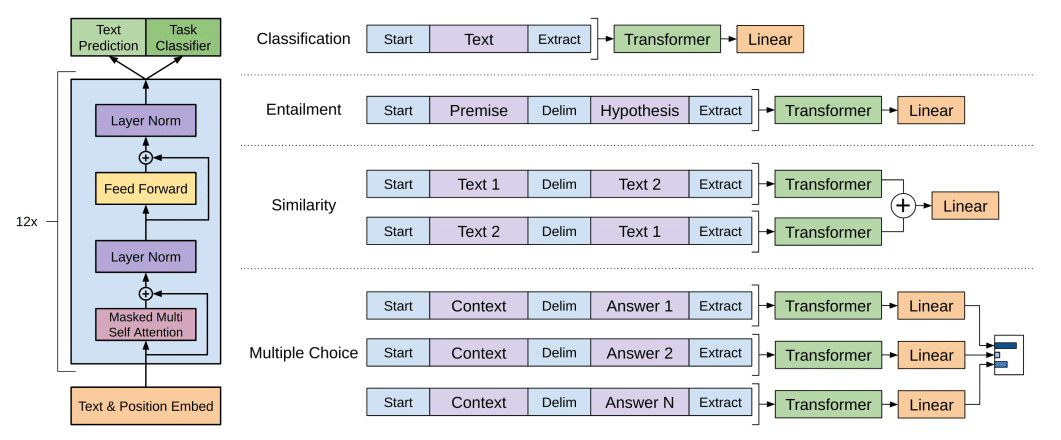

- Transformer models: A type of neural network architecture that uses attention mechanisms to process sequential data, known for its success in language translation and text generation.

Here’s a comparison table summarizing the main characteristics of these auto-regressive models:

| Model | Key Features | Application |
|---|---|---|
| ARMA | Auto-regression, Moving Average | Time-series forecasting |
| ARIMA | Auto-regression, Integrated, Moving Average | Financial data, economic trends |
| SARIMA | Seasonal Auto-regression, Integrated, Moving Average | Climate data, seasonal patterns |
| VAR | Multivariate, Auto-regression | Macroeconomic modeling |
| LSTM | Recurrent Neural Network | Natural Language Processing |
| Transformer | Attention Mechanism, Parallel Processing | Text Generation, Translation |

Auto-regressive models find applications in a wide range of fields:

- Time-Series Forecasting: Predicting stock prices, weather patterns, or website traffic.

- Natural Language Processing: Text generation, language translation, sentiment analysis.

- Image Generation: Creating realistic images pixel by pixel with auto-regressive models such as PixelRNN and PixelCNN.

- Music Composition: Generating new musical sequences and compositions.

- Anomaly Detection: Identifying outliers in time-series data.

Despite their strengths, auto-regressive models do have some limitations:

- Short-term Memory: They may struggle to capture long-range dependencies in data.

- Overfitting: High-order auto-regressive models may overfit to noise in the data.

- Data Stationarity: AR and ARMA models assume stationary data, and ARIMA-type models rely on differencing to reach stationarity, which can be challenging to achieve in practice.

To address these challenges, researchers have proposed various solutions:

- Recurrent Neural Networks (RNNs): Gated variants such as LSTMs can retain information over longer spans than a fixed-order AR model.

- Regularization Techniques: Used to prevent overfitting in high-order models.

- Seasonal Differencing: For achieving data stationarity in seasonal data.

- Attention Mechanisms: Improve long-range dependency handling in Transformer models.
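
The differencing step is simple enough to show directly. The sketch below uses a made-up series with a linear trend and a repeating pattern of period 4. A seasonal difference at lag 4 removes the repeating pattern, and a further first difference removes what is left of the trend. The function name `difference` and the data are assumptions for illustration.

```python
def difference(series, lag=1):
    """Return series[t] - series[t - lag] for every t >= lag."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]

season = [0, 5, 3, -2]                              # repeating pattern, period 4
series = [2 * t + season[t % 4] for t in range(16)]  # linear trend plus seasonality

seasonal_diff = difference(series, lag=4)  # removes the seasonal pattern
print(seasonal_diff)                       # every entry is 8, the trend over 4 steps
print(difference(seasonal_diff, lag=1))    # every entry is 0
```

Real data also contains noise, so the differenced series will fluctuate rather than become constant, but the same two operations apply.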

Main characteristics and other comparisons with similar terms

Auto-regressive models are often compared with other time-series models, such as:

- Moving Average (MA) models: Focus solely on the relationship between the present value and past errors, whereas auto-regressive models consider the past values of the variable.

- Auto-regressive Moving Average (ARMA) models: Combine the auto-regressive and moving average components, offering a more comprehensive approach to modeling time-series data.

- Auto-regressive Integrated Moving Average (ARIMA) models: Incorporate differencing to achieve stationarity in non-stationary time-series data.

Here’s a comparison table highlighting the main differences between these time-series models:

| Model | Key Features | Application |
|---|---|---|
| Auto-regressive (AR) | Regression against past values | Time-series forecasting |
| Moving Average (MA) | Regression against past errors | Noise filtering |
| Auto-regressive Moving Average (ARMA) | Combination of AR and MA components | Time-series forecasting, Noise filtering |
| Auto-regressive Integrated Moving Average (ARIMA) | Differencing for stationarity | Financial data, economic trends |
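
One practical way to tell AR and MA behaviour apart is the autocorrelation function. For an AR(1) process with coefficient $\phi$, the autocorrelation at lag $k$ is $\phi^k$ and decays gradually. For an MA(1) process $X_t = \varepsilon_t + \theta \varepsilon_{t-1}$, it equals $\theta / (1 + \theta^2)$ at lag 1 and is zero afterwards. The sketch below evaluates these textbook formulas; the function names and parameter values are chosen for illustration.

```python
def acf_ar1(phi, max_lag):
    """Theoretical autocorrelations of a stationary AR(1), lags 0..max_lag."""
    return [phi ** k for k in range(max_lag + 1)]

def acf_ma1(theta, max_lag):
    """Theoretical autocorrelations of an MA(1), lags 0..max_lag."""
    rho1 = theta / (1.0 + theta ** 2)
    acf = [1.0, rho1] + [0.0] * max(0, max_lag - 1)
    return acf[:max_lag + 1]

print("AR(1):", acf_ar1(0.5, 4))  # gradual geometric decay
print("MA(1):", acf_ma1(0.5, 4))  # cuts off after lag 1
```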

Auto-regressive models continue to evolve, driven by advancements in deep learning and natural language processing. The future of auto-regressive models is likely to involve:

- More Complex Architectures: Researchers will explore more intricate network structures and combinations of auto-regressive models with other architectures like Transformers and LSTMs.

- Attention Mechanisms: Attention mechanisms will be refined to enhance long-range dependencies in sequential data.

- Efficient Training: Efforts will be made to reduce the computational requirements for training large-scale auto-regressive models.

- Unsupervised Learning: Auto-regressive models will be used for unsupervised learning tasks, such as anomaly detection and representation learning.

How proxy servers can be used or associated with Auto-regressive models

Proxy servers do not change the models themselves, but they can support the data collection and deployment around auto-regressive models in certain applications:

- Data Collection: When gathering training data for auto-regressive models, proxy servers can be used to anonymize and diversify data sources, ensuring a more comprehensive representation of the data distribution.

- Data Augmentation: Proxy servers enable the generation of additional data points by accessing different online sources and simulating various user interactions, which helps in improving the model’s generalization.

- Load Balancing: In large-scale applications, proxy servers can distribute the inference load across multiple servers, ensuring efficient and scalable deployment of auto-regressive models.

- Privacy and Security: Proxy servers act as intermediaries between clients and servers, providing an additional layer of security and privacy for sensitive applications using auto-regressive models.

Auto-regressive models have become a fundamental tool for various data-related tasks, enabling accurate predictions and realistic data generation. As research in this field progresses, we can expect even more advanced and efficient models to emerge, revolutionizing the way we handle sequential data in the future.