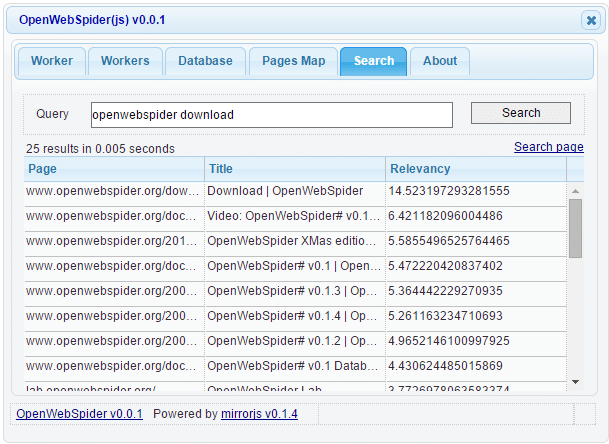

What is OpenWebSpider?

OpenWebSpider is an open-source web scraping tool designed to crawl websites and extract data. It is written in C#, and its features include URL discovery, text extraction, and link following. OpenWebSpider is highly customizable: users can set parameters such as crawl depth, the file types to download, and the domains to focus on.

What is OpenWebSpider Used for and How Does it Work?

OpenWebSpider is predominantly used for data extraction, search engine indexing, SEO audits, and web research. It can scan through a website to:

- Extract text data

- Identify internal and external links

- Download multimedia files

- Collect meta tags and keywords

- Generate sitemaps

Working Mechanism

- Seed URL: The user specifies the initial URL(s) for OpenWebSpider to start from.

- Crawl Depth: The user sets how many layers deep the spider should go.

- Filtering Rules: Include or exclude specific types of content and domains.

- Data Extraction: OpenWebSpider scans the HTML, XML, and other web formats to collect information.

- Data Storage: The extracted data is stored in databases or files for further analysis or use.
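The steps above can be sketched as a small breadth-first crawl loop. This is an illustrative Python sketch, not OpenWebSpider's own code: the names `crawl`, `LinkAndTextParser`, and the injected `fetch` callable are hypothetical, and storage is reduced to an in-memory dictionary.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkAndTextParser(HTMLParser):
    """Collects href values from <a> tags and the text chunks of a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        if data.strip():
            self.text_parts.append(data.strip())


def crawl(seed_urls, fetch, max_depth=2, allowed_domains=None):
    """Breadth-first crawl; `fetch(url)` returns HTML, or None on failure."""
    queue = deque((url, 0) for url in seed_urls)  # seed URLs start at depth 0
    seen = set(seed_urls)
    results = {}  # data storage: url -> extracted text

    while queue:
        url, depth = queue.popleft()
        html = fetch(url)
        if html is None:
            continue

        # Data extraction: pull out links and text from the page.
        parser = LinkAndTextParser()
        parser.feed(html)
        results[url] = " ".join(parser.text_parts)

        if depth >= max_depth:
            continue  # crawl depth reached; do not follow links further
        for href in parser.links:
            link = urljoin(url, href)
            domain = urlparse(link).netloc
            if allowed_domains is not None and domain not in allowed_domains:
                continue  # filtering rule: skip links outside the allowed domains
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return results
```

Passing `fetch` in as a parameter keeps the loop independent of how pages are downloaded, so the same function can be driven by a real HTTP client or, for a dry run, by a dictionary of canned pages such as `crawl(["http://example.com/"], pages.get)`.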

| Component | Description |
|---|---|
| Scheduler | Manages the crawling tasks |
| URL Frontier | Handles the queue of URLs to be visited |
| Web Fetcher | Downloads the web pages |
| Data Extractor | Extracts relevant data based on user-defined specifications |
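To make the URL Frontier row more concrete, here is a minimal Python sketch of a queue that hands out each URL only once. The class name `URLFrontier` and its methods are hypothetical, and the design (first-in, first-out order, with `#fragment` parts ignored when checking for duplicates) is an assumption for illustration rather than a description of OpenWebSpider's internals.

```python
from collections import deque
from urllib.parse import urldefrag


class URLFrontier:
    """FIFO queue of URLs to visit that never accepts the same URL twice."""

    def __init__(self):
        self._queue = deque()
        self._seen = set()

    def add(self, url):
        """Queue a URL; return False if it was already queued or visited."""
        # Drop the #fragment so /page and /page#top count as one URL.
        url, _fragment = urldefrag(url)
        if url in self._seen:
            return False
        self._seen.add(url)
        self._queue.append(url)
        return True

    def next(self):
        """Return the next URL to fetch, or None when the queue is empty."""
        return self._queue.popleft() if self._queue else None

    def __len__(self):
        return len(self._queue)
```

In this arrangement the scheduler would call `next()` to pick work for the fetcher, and the extractor would call `add()` for every link it finds.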

Why Do You Need a Proxy for OpenWebSpider?

A proxy server acts as an intermediary between OpenWebSpider and the website being scraped, providing anonymity, security, and efficiency. Here’s why it’s essential:

- Anonymity: Scraping frequently from the same IP address may lead to IP bans. Proxies provide multiple IP addresses to cycle through.

- Rate Limiting: Websites often restrict the number of requests from a single IP. Proxies can distribute these requests across multiple IPs.

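- Geographical Restrictions: Some websites serve different content depending on the visitor's location. A proxy located in the target region lets the crawler see the content served there.

- Data Accuracy: Some websites show altered (cloaked) content to clients they identify as scrapers. Using proxies reduces the chance of being identified and served cloaked information.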

- Concurrent Requests: With a proxy network, you can make multiple simultaneous requests, thereby speeding up the data collection process.
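The IP rotation described in the list above can be sketched as a small round-robin pool. This is a hedged Python illustration: `ProxyPool`, `max_failures`, and the proxy strings are hypothetical, and dropping a proxy after a fixed number of reported failures is just one simple policy among many.

```python
from collections import deque


class ProxyPool:
    """Hands out proxies round-robin and drops ones that keep failing."""

    def __init__(self, proxies, max_failures=3):
        self._ring = deque(proxies)
        self._failures = {proxy: 0 for proxy in self._ring}
        self._max_failures = max_failures

    def get(self):
        """Return the next proxy in rotation."""
        if not self._ring:
            raise RuntimeError("no working proxies left")
        proxy = self._ring[0]
        self._ring.rotate(-1)  # move the proxy just used to the back
        return proxy

    def report_failure(self, proxy):
        """Record a failed request (e.g. a ban or timeout) for a proxy."""
        if proxy not in self._failures:
            return  # unknown or already removed
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures:
            self._ring.remove(proxy)
            del self._failures[proxy]


pool = ProxyPool(["http://10.0.0.1:8080", "http://10.0.0.2:8080"])
first = pool.get()   # each call returns the next proxy in the ring
second = pool.get()
```

Because consecutive requests leave through different addresses, no single IP carries the full request volume, which is the effect the rate-limiting and anonymity points rely on.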

Advantages of Using a Proxy with OpenWebSpider

- Reduced Chance of IP Ban: Rotate through multiple IPs to mitigate the risk of getting blacklisted.

- Higher Success Rate: Access restricted or rate-limited pages more effectively.

- Enhanced Speed: Distribute requests through multiple servers for faster data collection.

- Better Data Quality: Access a broader scope of information without geographical limitations or cloaking.

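- Security: A proxy keeps the crawler's own IP address hidden from target sites, and an encrypted connection to the proxy adds a layer of protection for traffic in transit.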

What Are the Cons of Using Free Proxies for OpenWebSpider?

- Reliability: Free proxies are often unreliable and can abruptly stop working.

- Speed: Overcrowding on free proxy servers results in slow data retrieval.

- Data Integrity: Traffic routed through an untrusted free proxy can be intercepted or modified by its operator.

- Limited Geolocation Options: Fewer options for specifying geographical locations.

- Legal Risks: The operators and origins of free proxies are often unknown, so routing scraping traffic through them can put you at legal risk.

What Are the Best Proxies for OpenWebSpider?

For a seamless OpenWebSpider experience, OneProxy’s data center proxy servers offer:

- High Uptime: Close to 99.9% uptime for continuous scraping.

- Speed: With high bandwidth, get your scraping jobs done quicker.

- Security: SSL encryption to ensure the data you collect remains confidential.

- Global Coverage: Wide range of IP addresses from various geographical locations.

- Customer Support: 24/7 support for any troubleshooting.

How to Configure a Proxy Server for OpenWebSpider?

- Select Proxy Type: Choose a proxy server from OneProxy that fits your requirements.

- Authentication: Set up the credentials (typically a username and password) that the proxy requires.

- Integration: Input the proxy details into OpenWebSpider’s settings (usually found in a config file or UI).

- Test: Run a test scrape to ensure the proxy server is working seamlessly with OpenWebSpider.

- Monitoring: Check the logs regularly for failed requests, bans, or slow responses so that problems are caught early.
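Before entering proxy details into the crawler, it can help to verify them independently. The Python sketch below uses only the standard library and covers the authentication and test steps. The host `proxy.example.com`, the port, and the credentials are placeholders, and the helper names are hypothetical. OpenWebSpider's own configuration keys are not covered in this article, so the sketch does not guess at them.

```python
import urllib.request
from urllib.parse import quote


def build_proxy_url(host, port, username=None, password=None):
    """Return an http:// proxy URL, embedding credentials if given."""
    if username is None:
        return f"http://{host}:{port}"
    # Percent-encode credentials so characters like '@' or ':' stay valid.
    user = quote(username, safe="")
    pwd = quote(password or "", safe="")
    return f"http://{user}:{pwd}@{host}:{port}"


def make_opener(proxy_url):
    """Build a urllib opener that routes HTTP and HTTPS through the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def check_proxy(opener, url="https://example.com/", timeout=10):
    """Return True if a request through the proxy succeeds with HTTP 200."""
    try:
        with opener.open(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError and socket timeouts are OSError subclasses
        return False


# Placeholder values; substitute the details supplied by your proxy provider.
proxy_url = build_proxy_url("proxy.example.com", 8080, "user", "secret")
opener = make_opener(proxy_url)
# check_proxy(opener) performs a real network request through the proxy.
```

If `check_proxy` returns `False`, the usual suspects are wrong credentials, a blocked port, or a proxy that is offline, and it is easier to rule those out here than from inside a running crawl.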

Configuring a proxy server from OneProxy ensures you get the best out of your OpenWebSpider web scraping tasks. With the right setup, you can easily navigate through the complexities of modern-day web scraping challenges.